MomentsNeRF: Incorporating Orthogonal Moments in Convolutional Neural Networks for One or Few-Shot Neural Rendering

- Ahmad AlMughrabi Universitat de Barcelona

- Ricardo Marques Universitat de Barcelona

- Petia Radeva Universitat de Barcelona

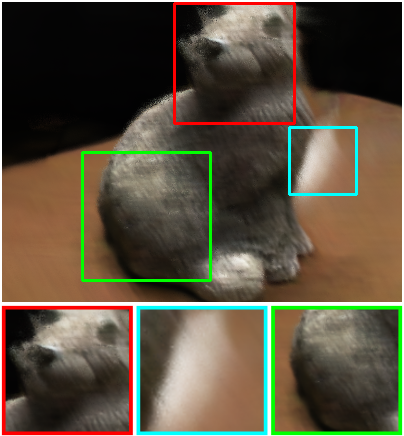

PSNR=25.624 SSIM=0.792 LPIPS=0.403 DISTS=0.235

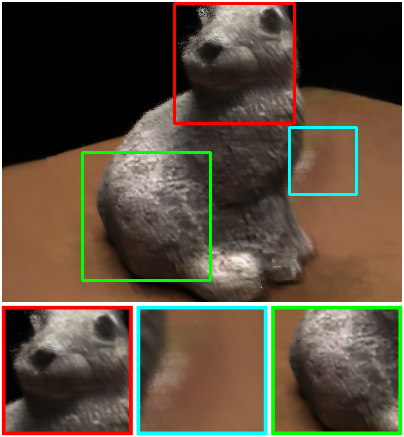

PSNR=28.501 SSIM=0.83 LPIPS=0.336 DISTS=0.175

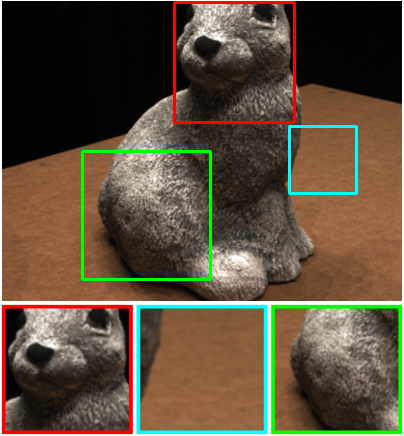

Qualitative comparison on the DTU dataset. We show novel views of the scan114 scene rendered by PixelNeRF and MomentsNeRF, compared with the reference, in the 3-view setting.
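PSNR, reported under each rendering above, is the standard peak signal-to-noise ratio, 10·log10(MAX²/MSE), computed against the reference view. The minimal sketch below assumes images are float arrays scaled to [0, 1] (so MAX = 1); the function names are hypothetical and are not taken from the MomentsNeRF code.

```python
import numpy as np

def psnr(reference: np.ndarray, rendered: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    out = np.asarray(rendered, dtype=np.float64)
    if ref.shape != out.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

def mse_ratio_from_psnr_gain(gain_db: float) -> float:
    """Factor by which the MSE shrinks for a given PSNR gain (MAX held fixed)."""
    return 10.0 ** (gain_db / 10.0)
```

Because PSNR is logarithmic in the MSE, a fixed dB gain is a fixed multiplicative error reduction: the 3.39 dB improvement quoted in the abstract corresponds to `mse_ratio_from_psnr_gain(3.39)`, roughly a 2.18x lower mean squared error at the same MAX.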

Abstract

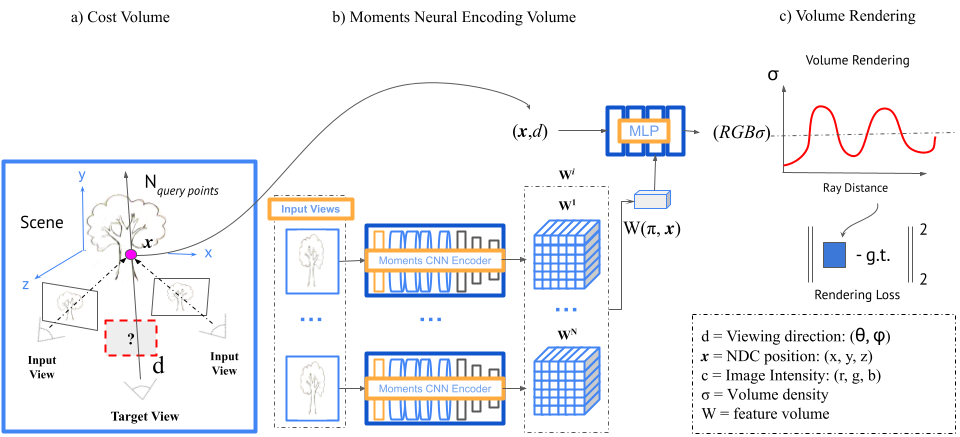

We propose MomentsNeRF, a one- and few-shot learning framework that predicts a neural representation of a scene using orthogonal moments. Our architecture offers a transfer learning method that pre-trains on multiple scenes and incorporates a per-scene optimisation at test time using one or a few images. The transfer learning step learns from features extracted with orthogonal Gabor and Zernike moments. Compared with recent one- and few-shot neural rendering frameworks, our approach performs better at synthesising scene details: capturing complex textures and shapes, reducing noise, eliminating artefacts, and completing missing parts. We conduct extensive experiments on real scenes from the DTU dataset. MomentsNeRF improves on existing approaches by 3.39 dB PSNR, 11.1% SSIM, 17.9% LPIPS, and 8.3% DISTS. Moreover, MomentsNeRF achieves state-of-the-art performance for both novel view synthesis and single-image 3D view reconstruction.
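To make the two feature families concrete, here is a minimal NumPy sketch of the textbook definitions: a Zernike moment Z_nm = (n+1)/π ∬ f(x, y) V*_nm(ρ, θ) dx dy over the unit disk, with V_nm = R_nm(ρ)·e^{imθ}, and a bank of real Gabor filters at several orientations. This page does not specify the moment orders, filter parameters, or how the features are fed to the CNN, so every function name and default value below is a hypothetical illustration, not the MomentsNeRF implementation.

```python
import math
import numpy as np

def radial_poly(n: int, m: int, rho: np.ndarray) -> np.ndarray:
    """Zernike radial polynomial R_nm(rho); assumes n - |m| is even and |m| <= n."""
    m = abs(m)
    out = np.zeros_like(rho)
    for s in range((n - m) // 2 + 1):
        coef = ((-1) ** s * math.factorial(n - s)
                / (math.factorial(s)
                   * math.factorial((n + m) // 2 - s)
                   * math.factorial((n - m) // 2 - s)))
        out += coef * rho ** (n - 2 * s)
    return out

def zernike_moment(img: np.ndarray, n: int, m: int) -> complex:
    """Approximate Z_nm = (n+1)/pi * integral of f * conj(V_nm) over the unit disk."""
    if abs(m) > n or (n - abs(m)) % 2:
        raise ValueError("need |m| <= n and n - |m| even")
    h, w = img.shape
    if h != w:
        raise ValueError("expects a square patch")
    # Map pixel centres onto [-1, 1] x [-1, 1]; keep only pixels inside the unit disk.
    c = (2 * np.arange(h) + 1 - h) / h
    x, y = np.meshgrid(c, c)
    rho = np.hypot(x, y)
    theta = np.arctan2(y, x)
    inside = rho <= 1.0
    basis_conj = radial_poly(n, m, rho) * np.exp(-1j * m * theta)
    pixel_area = (2.0 / h) ** 2  # turns the pixel sum into an integral estimate
    return (n + 1) / math.pi * np.sum(img[inside] * basis_conj[inside]) * pixel_area

def gabor_kernel(size: int, sigma: float, theta: float, wavelength: float,
                 gamma: float = 0.5, psi: float = 0.0) -> np.ndarray:
    """Real Gabor filter: Gaussian envelope times a cosine oriented at angle theta."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(np.float64)
    xr = x * np.cos(theta) + y * np.sin(theta)
    yr = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
    return envelope * np.cos(2 * np.pi * xr / wavelength + psi)

def gabor_features(img: np.ndarray, n_orientations: int = 4, size: int = 15,
                   sigma: float = 3.0, wavelength: float = 6.0) -> np.ndarray:
    """Mean absolute filter response per orientation (circular FFT convolution)."""
    spectrum = np.fft.fft2(img)
    feats = []
    for k in range(n_orientations):
        kern = gabor_kernel(size, sigma, np.pi * k / n_orientations, wavelength)
        resp = np.real(np.fft.ifft2(spectrum * np.fft.fft2(kern, s=img.shape)))
        feats.append(np.mean(np.abs(resp)))
    return np.array(feats)
```

The magnitude |Z_nm| is unchanged when the patch is rotated about its centre, which is one reason Zernike moments are popular shape descriptors; the Gabor bank complements them by responding to oriented texture at a chosen scale (here `wavelength`). The FFT convolution wraps around the image borders, which is acceptable for a sketch but would normally be replaced by padded convolution.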

Diagram of our proposed framework, outlining the entire workflow from a set of videos to any NeRF-like application. The input shown in the diagram consists of four videos taken from the Nutrition5k dataset.

Rendering

Rendered images from PixelNeRF and our model.

Citation

Acknowledgements

The website template was borrowed from here.